Profiling ESP32 UDP Sends

With my new profiling setup, I decided to test efficiently sending UDP data logs.

With my findings from Making an Embedded Profiler 3: ESP IDF Tools, I decided to stick with using MabuTrace. While it isn’t exactly what I wanted for profiling, it covers all the key features, and in many ways is better than anything I’d end up making.

However, it doesn’t cover the continuous logging use case. While it would generally make more sense to use MQTT, ESP-IDF logging, or some other existing protocol, I wanted to try using my https://github.com/axlan/min-logger since I made it with the exact features I wanted.

For the ESP32, min-logger was still missing a backend for buffering logged data and sending it to the host.

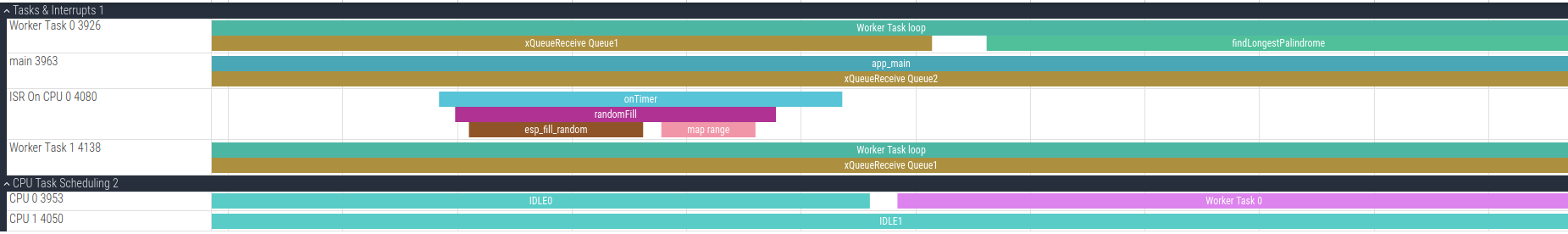

I quickly setup a ESP-IDF framework test application, and began to experiment with different approaches. MabuTrace was great in letting me see exactly what was happening with each approach.

My goal was to set up a system where multiple tasks on multiple cores could write data simultaneously with minimal latency. This data would be buffered and periodically sent to the host over UDP. Since I don’t care about latency and each send has overhead, each send would be the maximum UDP packet size. I wanted to find a way to do this while being efficient with CPU and memory usage.

Code for these tests is found at: https://github.com/axlan/esp32-idf-udp-send-profiling

Data Synchronization

After reading the ESP-IDF documentation, I found the FreeRTOS ring buffer addition. Specifically, I could use it in “byte buffer” mode. This meant I could efficiently write arbitrarily sized chunks from multiple tasks and combine them into a single large read for sending over UDP. Initially, I set it up to drain the ring buffer into an array in the UDP send task. However, I realized that by sizing the buffer to be twice the desired UDP write size, I could effectively double-buffer the data and not need to worry about tearing on the consumer side.

Here’s a little demo below. Note that as long as each read is half the buffer size, the data will always be contiguous.

Ring Buffer Operations (Size: 20)

The issue with implementing this approach is that the FreeRTOS ring buffer addition doesn’t have a way to wait for a certain threshold of data to be available. This meant I needed to poll the buffer to determine when it was full enough to read from.

lwIP UDP Send Options

The first place I looked for an example of efficiently sending UDP packets was the ESP32 Arduino library. It has the AsyncUDP library. This library introduced me to an API I hadn’t seen before: the lwIP raw/callback-style API. I spent considerable time trying to unravel this poorly documented interface. Once I started to understand it, I noticed the esp32-idf lwIP documentation mentioned that this API is not supported and to instead use a similar netconn API. Additionally, even this netconn API is only unofficially supported, and the BSD sockets are the recommended interface.

Regardless, I completed a basic test application with both the BSD and raw APIs:

Even looking at heap usage it isn’t obvious that the raw API has any advantage over the BSD.

This was somewhat surprising since I set up the raw API to perform zero-copy operations:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

static void udp_client_task_raw(void *pvParameters)

{

struct udp_pcb *pcb;

pcb = udp_new();

udp_bind(pcb, IP_ADDR_ANY, 0);

ip_addr_t dest_ip;

dest_ip.type = IPADDR_TYPE_V4;

IP4_ADDR(&dest_ip.u_addr.ip4, 192, 168, 1, 111);

// Allocate a pbuf that will point to a block of read only memory. In this case it will point to a half of the ring buffer being held.

struct pbuf *pbuf = pbuf_alloc(PBUF_TRANSPORT, UDP_MESSAGE_SIZE, PBUF_ROM);

assert(pbuf != NULL);

// This is effectively const, but needs to be mutable to match pbuf typing since it's used for receive calls as well as send.

void *held_data = NULL;

while (1)

{

// Check if UDP send is done. If so return data to ring buffer.

if (held_data != NULL && pbuf->ref == 1)

{

vRingbufferReturnItem(buf_handle, held_data);

held_data = NULL;

}

// Check if a UDP packet's worth of data is ready to send.

if (xRingbufferGetCurFreeSize(buf_handle) <= UDP_MESSAGE_SIZE)

{

size_t read_size = 0;

// By always reading half the buffer size, the read will never be limited by rolling over the end of the buffer.

held_data = xRingbufferReceiveUpTo(buf_handle, &read_size, pdMS_TO_TICKS(portMAX_DELAY), UDP_MESSAGE_SIZE);

assert(read_size == UDP_MESSAGE_SIZE);

pbuf->payload = held_data;

pbuf->tot_len = UDP_MESSAGE_SIZE;

udp_sendto(pcb, pbuf, &dest_ip, PORT);

}

vTaskDelay(10 / portTICK_PERIOD_MS);

}

}

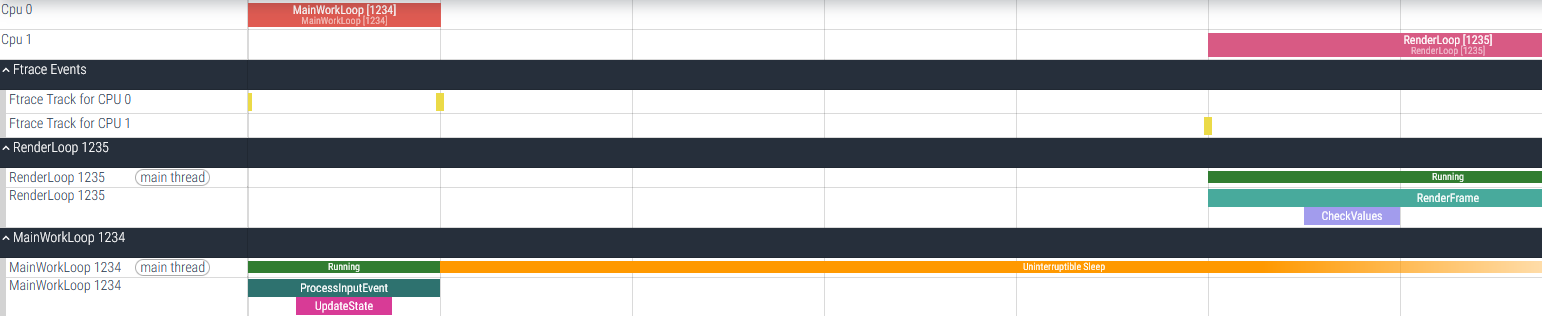

It’s also surprising that for both implementations, the send call usually blocks for 3.5ms with just a single send every couple of seconds.

There are several possibilities for this result:

- As mentioned, the raw API isn’t maintained, so it may be doing something less efficient than the better-supported BSD sockets.

- Since no other data is being sent, each send call transmits the packet immediately. The behavior might be different if data was being queued.

- The BSD socket appears to leverage the tiT (the lwIP background) task. It’s possible it’s using resources that are already allocated there.

- The expected behavior may be for these functions to block to reduce buffer usage.

- There may be more optimal compile-time configuration settings that would improve the raw interface if sockets weren’t being used anywhere.

As a final test, I also implemented this with the netconn API. It ended up performing being fairly similar to the BSD code, passing off the processing to the tiT task.

Here are the three implementations:

- bsd: https://github.com/axlan/esp32-idf-udp-send-profiling/blob/f92c54270bbf05a5d4f08c3ff62366de2720ff9a/src/main.cpp#L226

- netconn: https://github.com/axlan/esp32-idf-udp-send-profiling/blob/f92c54270bbf05a5d4f08c3ff62366de2720ff9a/src/main.cpp#L173

- raw: https://github.com/axlan/esp32-idf-udp-send-profiling/blob/f92c54270bbf05a5d4f08c3ff62366de2720ff9a/src/main.cpp#L124

In the end, for this use case, the BSD sockets make the most sense since they are the simplest and best documented. The only real behavioral advantage I could identify is that the raw implementation spends more time in the user task instead of the lwIP task. This could potentially help the latency of other user tasks since they could more easily preempt it.